
Learn some important technical and financial infrastructure considerations to maximize efficiency when building SaaS applications.

Current research points to the Software-as-a-Service (SaaS) industry being worth somewhere between $200 billion and $800 billion USD, depending on where you draw the line on “SaaS”. Given this large market, it’s no surprise that startups and small businesses often look to launch SaaS products. Even established enterprises are transforming legacy products into SaaS applications. Successful SaaS products leverage product agility and operational efficiency as pillars of their business strategy. AWS is the platform of choice when building these products, but to properly architect, build, and scale a SaaS application, it’s important to recognize and understand the tradeoffs between various infrastructure decisions. In this article, we will walk through these tradeoffs and try to provide the context you need to evaluate, at a technical and a business level, which services work best for you.

First, there’s no “one-size-fits-all” blueprint for these solutions. That said, there are some common categorizations and decisions that every application faces. We can think about SaaS efficiency and optimization across the following core categories: tenancy, networking, compute, online transaction processing (OLTP), and online analytical processing (OLAP).

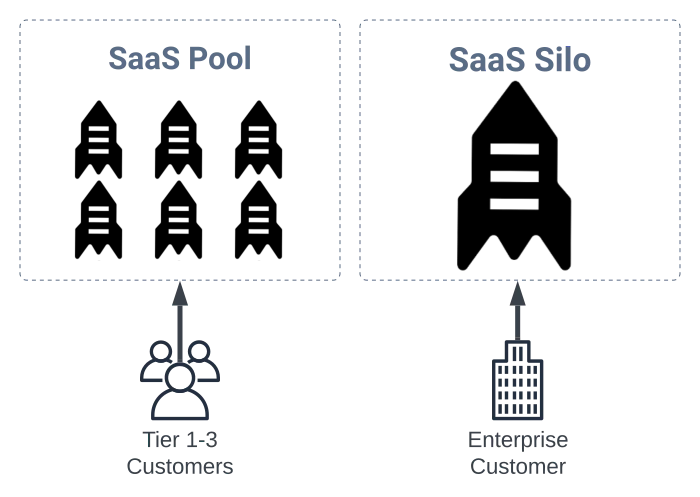

Tenancy is a discussion that merits its own blog post, but the AWS Well-Architected Framework SaaS lens does a good job of walking through things here. The TL;DR is that tenant isolation is often a desirable feature for discerning customers. The capability to deploy your application in a way that supports siloed tenants, pooled tenants, or something in between, lets you create a billing and cost model that scales extremely well. It can be hard to understand per-customer costs with a fully pooled model. In a fully pooled tenant structure, all of your customers are leveraging the same application stacks. By allowing large customers to break out into silos you can better manage cost, performance, and security for your larger or more specialized customers. These silos can even be unique AWS accounts per customer.

The easiest way to realize efficiencies in tenancy is to leverage infrastructure as code. If deploying your entire application stack is as simple as running terraform apply or cdk deploy, you can automate onboarding, even for large enterprise customers that require their own silo. For pooled tenants, you can continue to maintain a simplified onboarding process, and for bridge tenants, you can set up a number of cells and map each tenant to one of them.
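To make the pooled, bridge, and siloed options a little more concrete, here is a minimal sketch of how an onboarding step might decide where a new tenant lands. Everything in it is an assumption for illustration: the tier names, the stack naming scheme, and the fixed cell count are hypothetical, and a real onboarding flow would hand the resulting stack name to your infrastructure-as-code tooling.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    POOLED = "pooled"  # shares one application stack with other tenants
    BRIDGE = "bridge"  # assigned to one of several shared cells
    SILOED = "siloed"  # gets a dedicated stack, possibly its own AWS account


@dataclass(frozen=True)
class Deployment:
    stack_name: str     # hypothetical name of the stack your IaC tool deploys
    needs_deploy: bool  # True when onboarding must create new infrastructure


def plan_onboarding(tenant_id: str, tier: Tier, cell_count: int = 4) -> Deployment:
    """Decide which stack a new tenant lands on (all naming is illustrative)."""
    if tier is Tier.SILOED:
        # A silo means a fresh deployment of the whole application stack.
        return Deployment(f"saas-silo-{tenant_id}", needs_deploy=True)
    if tier is Tier.BRIDGE:
        # Summing the ID's bytes gives a stable cell assignment across runs.
        cell = sum(tenant_id.encode("utf-8")) % cell_count
        return Deployment(f"saas-cell-{cell}", needs_deploy=False)
    # Pooled tenants all share the same pre-existing stack.
    return Deployment("saas-pool", needs_deploy=False)


if __name__ == "__main__":
    for tier in Tier:
        print(tier.value, plan_onboarding("acme", tier))
```

Only the siloed path triggers a new deployment here, which is the case where one-command infrastructure as code pays off the most.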

Networking is another area where SaaS applications can realize efficiencies. The first core networking service to consider is Amazon Route 53, for DNS. Within Route 53 you can leverage a number of features to improve performance and availability, starting as simply as latency-aware or geo-aware routing and moving all the way up to extremely available solutions like Amazon Application Recovery Controller. If you couple solid and configurable DNS settings with a CDN like Amazon CloudFront, you should be able to meet most of the latency demands your customers have.
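As a rough illustration of latency-aware routing, the sketch below builds the change batch for one latency-based A record per region. The domain name, regions, and IP addresses are placeholders (the IPs come from the documentation-only 203.0.113.0/24 range), and the helper function is our own, not part of any AWS SDK.

```python
def latency_record_change(name: str, region: str, ip_address: str, ttl: int = 60) -> dict:
    """Build a change batch that upserts one latency-based A record."""
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    # Records that share a name need distinct identifiers.
                    "SetIdentifier": f"{name}-{region}",
                    # Setting Region is what makes this a latency record.
                    "Region": region,
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ip_address}],
                },
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder endpoints, one per region the application is deployed in.
    endpoints = {"us-east-1": "203.0.113.10", "eu-west-1": "203.0.113.20"}
    for region, ip in endpoints.items():
        print(latency_record_change("app.example.com", region, ip))
```

With boto3, a batch shaped like this could be passed as the ChangeBatch argument of the Route 53 client's change_resource_record_sets call, alongside your hosted zone ID.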

In some cases, DNS can become a part of your SaaS product strategy. You can offer custom domains or custom subdomains as a feature of your solution. With AWS Certificate Manager (ACM) you can even automatically provision and vend TLS certificates on behalf of those customers.

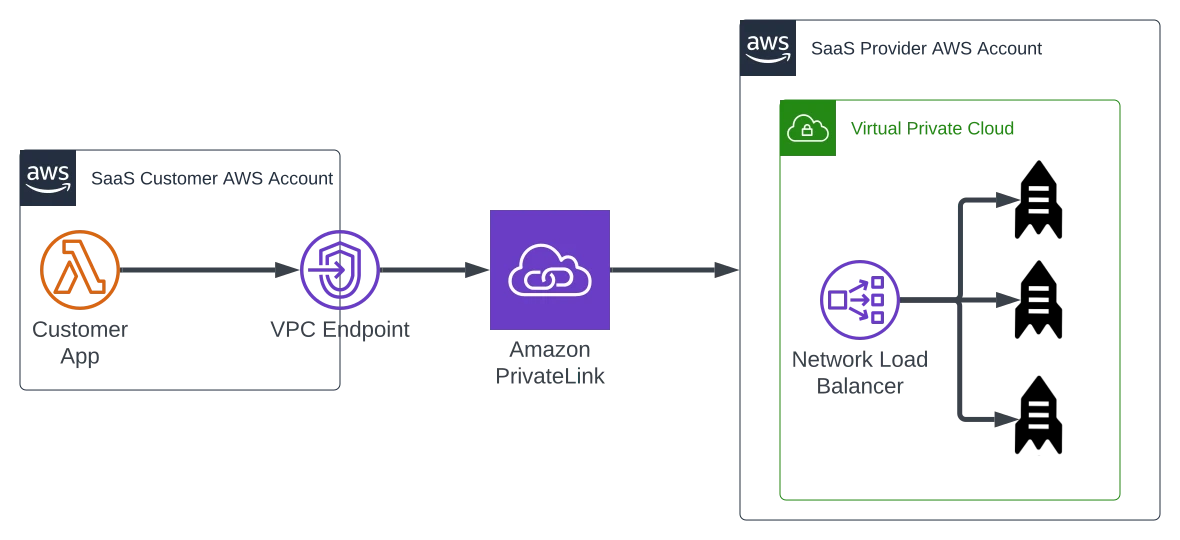

Looking beyond DNS, in the past, some AWS-based SaaS providers had to come up with creative networking constructs to fit their service into a customer’s existing networking. SaaS providers would offer VPNs or cross-account VPC peering to make accessing their services easier or more secure. While those methods still have valid use cases for most workloads, you can now leverage AWS PrivateLink to offer connectivity directly into your customers’ VPCs across AWS’s own network backbone. This is mutually beneficial because it gives you the opportunity to colocate your resources in the region or availability zone closest to the customer, minimizing networking costs. AWS Transit Gateway has also made it significantly easier to deliver complex networking stacks. Combining these two services is a killer feature for a large number of SaaS solutions.

One last networking concern that bears mentioning is IPv6. It’s now 2022 and IPv4-dependent solutions don’t have the longevity they used to. If you’re building a new SaaS platform that deals with low-level networking it absolutely must work with IPv6.

We interrupt this technical discussion to bring you some important advice on how to make informed cost decisions in your infrastructure. Work Backwards. There’s a common business metric called Cost(s) of Goods Sold (COGS). To build a successful SaaS business you must understand your COGS, add a healthy margin, and then use those metrics to drive your infrastructure decisions. Math doesn’t lie. By breaking down your delivery costs into unit economics, whether that unit is a user or a gigabyte, you uncover truths about your infrastructure that no amount of VC funding or growth metrics can overshadow. When we’ve worked with customers to build efficient SaaS applications, understanding their margins has unlocked deep thinking about product and infrastructure.
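Here is a small worked example of that unit-economics arithmetic. The dollar figures and the 75% target margin are made up for illustration, and the two cost buckets are a simplification of what a real COGS calculation would include.

```python
def unit_cost(monthly_infra_cost: float, monthly_support_cost: float, units: int) -> float:
    """COGS per unit (for example per user or per gigabyte) for one month."""
    if units <= 0:
        raise ValueError("units must be positive")
    return (monthly_infra_cost + monthly_support_cost) / units


def price_for_margin(cost_per_unit: float, target_gross_margin: float) -> float:
    """Price per unit that yields the target gross margin.

    Gross margin = (price - cost) / price, so price = cost / (1 - margin).
    """
    if not 0 <= target_gross_margin < 1:
        raise ValueError("target_gross_margin must be in [0, 1)")
    return cost_per_unit / (1 - target_gross_margin)


if __name__ == "__main__":
    # Hypothetical month: $12,000 infrastructure, $3,000 support, 500 users.
    cost = unit_cost(12_000, 3_000, 500)
    price = price_for_margin(cost, 0.75)
    print(f"cost per user: ${cost:.2f}, price for a 75% margin: ${price:.2f}")
```

Working backwards then means asking which infrastructure choices keep the cost per unit low enough to hit the price your market will actually bear.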

Now that we understand the economics of building SaaS applications let’s hop back over to the technical discussion.

For most, but not all, applications, compute is the dominating cost. AWS offers several different compute platforms that each scale and behave in various ways. At the lowest level, we have Amazon Elastic Compute Cloud (EC2) with some 400+ instance types and sizes for virtually any workload. EC2 is a fantastic platform for many solutions, and it has the best margin of any compute offering from AWS, but there’s a DevOps tax to pay to be able to scale and operate on EC2.

Next, we have the container-based services Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS). Containerization has a number of advantages over raw application deployments on EC2. First, containers are typically quite a bit easier to test and work with for your development teams. Second, containers often allow you to achieve higher utilization of your underlying compute resources. Finally, containers give you some composability and separation of concerns that are harder to realize when deploying applications directly on EC2. ECS doesn’t have any significant additional costs and works well for many workloads. EKS leverages Kubernetes to provide another useful abstraction layer, at the cost of some fixed infrastructure to support that abstraction. EKS has the additional benefit of the extremely large Kubernetes ecosystem. Deciding between ECS or EKS is a topic that warrants a deep dive of its own, so we’ll save that for another time.

Continuing along the spectrum of compute options we arrive at AWS Fargate. Fargate lets you run containers without having to manage the underlying compute instances. You provision a task by specifying the desired vCPUs, RAM, and container images – and AWS manages the underlying compute. Both ECS and EKS can use Fargate. While pricing varies by region, Fargate typically has a 20-40% cost premium relative to EC2. What does that extra spend get you? You can subtract the entire DevOps tax of EC2 from your application infrastructure. Whether this makes sense or not really depends on the application. Fargate can reduce your operational overhead but if it adds too much to your costs it may not be worth it. That’s why having a good understanding of your margins is so important.
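One way to reason about that premium is to put a number on the DevOps tax. The sketch below is a back-of-the-envelope comparison, assuming the same workload costs a fixed amount in raw EC2 compute and that operational effort can be priced at an hourly rate. The 30% premium, the $10,000 compute bill, and the $100 hourly rate are all hypothetical inputs.

```python
def fargate_monthly_cost(ec2_compute_cost: float, premium: float) -> float:
    """Fargate cost modeled as the EC2 compute cost plus a fractional premium."""
    return ec2_compute_cost * (1 + premium)


def ec2_total_monthly_cost(ec2_compute_cost: float, ops_hours: float, hourly_rate: float) -> float:
    """EC2 cost including the engineering time spent operating the fleet."""
    return ec2_compute_cost + ops_hours * hourly_rate


def breakeven_ops_hours(ec2_compute_cost: float, premium: float, hourly_rate: float) -> float:
    """Monthly ops hours at which Fargate and EC2 cost the same."""
    return ec2_compute_cost * premium / hourly_rate


if __name__ == "__main__":
    compute, premium, rate = 10_000.0, 0.30, 100.0  # illustrative inputs
    hours = breakeven_ops_hours(compute, premium, rate)
    print(f"Fargate: ${fargate_monthly_cost(compute, premium):,.0f} per month")
    print(f"Break-even at about {hours:.0f} ops hours per month")
```

With these inputs the break-even point is about 30 hours a month: if operating the EC2 fleet takes more engineering time than that, the Fargate premium pays for itself in this simplified model.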

At the end of the compute spectrum, we have one of my favorite AWS products, AWS Lambda. With Lambda we take away yet another configuration concern and only focus on RAM, provisioned and billed in “gigabyte seconds”. The concept of “serverless” didn’t really exist prior to Lambda. Serverless has absolutely transformed our industry since it came into practice in 2014. In the years since its release, Lambda has enabled companies to build SaaS solutions that have generated billions of dollars in revenue. These companies literally could not have been built on other compute platforms.

The advantage of serverless-based SaaS solutions is that the costs tend to scale linearly with the workload. If you’re building a greenfield solution, serverless compute options tend to be an excellent choice. There are a few exceptions around particularly compute-intensive workloads, long-running workloads, or systems that might sit in idle IO_WAIT states for long periods of time. The disadvantage of serverless is that the compute comes at a premium. However, you also get to realize 100% utilization rates of your serverless resources. Understanding this tradeoff is an important step in your infrastructure maturity.
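The sketch below illustrates both halves of that tradeoff: a Lambda-style bill that grows linearly with requests, and the effective cost of provisioned compute once utilization is taken into account. The default prices are assumptions based on commonly published x86 list prices and will vary by region, architecture, and over time. The model also ignores the free tier and other charges such as data transfer.

```python
def lambda_monthly_cost(
    requests: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float = 0.0000166667,  # assumed list price, check your region
    price_per_million_requests: float = 0.20,   # assumed list price, check your region
) -> float:
    """Estimate a monthly bill from request count, duration, and memory."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_charge = requests / 1_000_000 * price_per_million_requests
    return gb_seconds * price_per_gb_second + request_charge


def effective_hourly_cost(hourly_price: float, utilization: float) -> float:
    """Cost per fully utilized hour of a provisioned instance."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_price / utilization


if __name__ == "__main__":
    for requests in (1_000_000, 10_000_000, 100_000_000):
        cost = lambda_monthly_cost(requests, avg_duration_ms=100, memory_mb=512)
        print(f"{requests:>11,} requests: ${cost:,.2f}")
    # A hypothetical $0.10/hour instance that sits 75% idle.
    print(f"effective hourly cost: ${effective_hourly_cost(0.10, 0.25):.2f}")
```

An instance running at 25% utilization effectively costs four times its sticker price per unit of useful work, which is the gap the serverless premium has to beat.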

To close out compute, I want to share a few interesting trends we’ve seen across various SaaS customer journeys. Legacy workloads are typically deployed on EC2, with few frills, but as a company incrementally modernizes its solutions, those workloads tend to move towards containerization and serverless. Interestingly, for very mature and well-understood workloads, customers will often move back from serverless solutions to containerized solutions because they’re able to gain significant margins based on their domain knowledge of the workloads. Some workloads go even further and take advantage of EC2 Spot Instances to realize better margins.

Overall, we believe AWS offers the broadest and most versatile set of compute options currently available in the cloud. We work with our customers to build an understanding of how those options scale and grow with their solutions.

Storage and Databases

Now that we’ve covered networking and compute the only remaining foundational component is your data layer. We can split the data layer into storage, OLTP, and OLAP workloads.

At the storage layer, your main service is Amazon S3. Far more than a simple blob storage system, S3 can often act as your data lake and analytics platform. For multi-tenant pools, applications can use their identity providers and IAM to provide granular access with S3 key prefixes. For additional isolation or security, you can create unique buckets for larger customers. Either way, you can use Amazon Athena to serve both real-time and business intelligence style queries for your end-users.
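As a sketch of prefix-based isolation in a pooled bucket, the function below generates an IAM policy document that limits a tenant to its own key prefix. The bucket name, the tenants/ prefix layout, and the alphanumeric tenant ID rule are assumptions for illustration. How you attach the policy, for example as a session policy when assuming a role, depends on your identity setup.

```python
import json


def tenant_s3_policy(bucket: str, tenant_id: str) -> dict:
    """IAM policy document scoping access to one tenant's key prefix."""
    # Reject IDs containing characters like "/" or "*" that could widen the scope.
    if not tenant_id.isalnum():
        raise ValueError("tenant_id must be alphanumeric")
    prefix = f"tenants/{tenant_id}/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOwnPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is a bucket-level action, so restrict it by prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                "Sid": "ReadWriteOwnObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(tenant_s3_policy("example-saas-data", "tenant42"), indent=2))
```

For larger customers that need a dedicated bucket, the same shape works with the bucket name varying per tenant instead of the prefix.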

When it comes to OLTP solutions for your SaaS applications, you must again consider the DevOps tax. While it is true you could run your databases directly on EC2, there is significant operational overhead to maintaining those systems. With Amazon RDS, you pay compute and storage cost premiums in exchange for offloading that DevOps tax. If your margins allow for it, RDS can be a great way to accelerate development while minimizing ops. When you additionally consider unique database engines like Amazon Aurora, your applications can gain capabilities that differentiate you from your competitors, but again, at an additional cost.

At certain scales or for certain workloads, SaaS applications can take advantage of NoSQL data stores like MongoDB or Amazon DocumentDB. When working with traditional SQL datastores, you tend to encode significant logic into the schema of the tables. With NoSQL datastores, you bring that relational logic out of the data schema and into the application layer. For many applications, this tradeoff introduces minimal complexity, but certain workloads still lend themselves better to relational models. At particularly large scales, traditional SQL datastores simply can’t continue to add vertical capacity, so you’re left with the decision of sharding (partitioning) your application or using a horizontally scalable OLTP system like DynamoDB or MongoDB. Some SaaS applications can leverage that partitioning as a product feature.
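To illustrate sharding by tenant, here is a minimal sketch that hashes tenant IDs onto a fixed number of shards and lets specific tenants be pinned to a dedicated shard. The shard count, the pinned mapping, and the tenant names are hypothetical, and hash-modulo placement is just one of several possible strategies.

```python
import hashlib
from typing import Mapping, Optional


def shard_for_tenant(
    tenant_id: str,
    shard_count: int,
    pinned: Optional[Mapping[str, int]] = None,
) -> int:
    """Return the shard index that holds a tenant's data."""
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    # Explicit placement wins, e.g. a large customer on a dedicated shard.
    if pinned and tenant_id in pinned:
        return pinned[tenant_id]
    # sha256 is stable across processes, unlike Python's built-in hash().
    digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


if __name__ == "__main__":
    pinned = {"bigcorp": 7}  # hypothetical enterprise tenant on its own shard
    for tenant in ("acme", "globex", "bigcorp"):
        print(tenant, "-> shard", shard_for_tenant(tenant, 4, pinned))
```

Note that plain modulo placement moves most tenants when the shard count changes, so a lookup table or consistent hashing may be a better fit if you expect to add shards often. The pinned mapping is where partitioning can turn into a product feature, such as dedicated capacity for an enterprise plan.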

Serverless data stores like Amazon DynamoDB offer many of the same advantages that serverless compute offers. Serverless datastores don’t have fixed infrastructure costs. To run a SQL-powered data plane (excluding Aurora Serverless) you have to pay the underlying infrastructure costs regardless of usage. With DynamoDB in on-demand mode, you pay only for the data you store and the requests you make. This model, again, scales linearly with your business. The disadvantage of DynamoDB is increased application complexity, especially when working with single-table designs.
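To give a feel for where that complexity comes from, here is a sketch of one common single-table convention in a multi-tenant setting: the partition key carries the tenant and the sort key carries the entity type and ID. The attribute names PK and SK, the TENANT# and ORDER# prefixes, and the order entity itself are assumptions for illustration, not a prescribed schema.

```python
def order_item(tenant_id: str, order_id: str, total_cents: int) -> dict:
    """Shape of one order item in a hypothetical single-table design."""
    return {
        "PK": f"TENANT#{tenant_id}",  # groups all of a tenant's data together
        "SK": f"ORDER#{order_id}",    # entity type prefix plus entity ID
        "total_cents": total_cents,
    }


def orders_query(tenant_id: str) -> dict:
    """Query parameters that fetch every order belonging to one tenant."""
    return {
        "KeyConditionExpression": "PK = :pk AND begins_with(SK, :sk)",
        "ExpressionAttributeValues": {
            ":pk": f"TENANT#{tenant_id}",
            ":sk": "ORDER#",
        },
    }


if __name__ == "__main__":
    print(order_item("t1", "o9", 1250))
    print(orders_query("t1"))
```

Parameters shaped like these are intended to be passed to a boto3 Table resource's query method. The relational logic that a SQL schema would express with tables and foreign keys now lives in these string conventions, which is exactly the application-side complexity to budget for.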

Across the customers we’ve worked with, we’ve seen petabyte-scale SQL clusters and DynamoDB tables. We’ve seen Postgres clusters and DynamoDB tables scale to hundreds of millions of requests per second. It is very likely a modern database can serve the traffic you need to support. Increasingly, customers need multiple, purpose-built database solutions to run their products efficiently.

There are many things to consider when building your SaaS platform, and understanding the cost vs. efficiency tradeoffs as you’re defining your infrastructure can seem daunting. With years of experience helping our customers architect and launch modern SaaS products, we can help you navigate this decision tree and architect a SaaS platform that is best for both your business and your end-users.

Resources:

Modeling SaaS Tenant Profiles on AWS

Randall Hunt, Chief Technology Officer at Caylent, is a technology leader, investor, and hands-on-keyboard coder based in Los Angeles, CA. Previously, Randall led software and developer relations teams at Facebook, SpaceX, AWS, MongoDB, and NASA. Randall spends most of his time listening to customers, building demos, writing blog posts, and mentoring junior engineers. Python and C++ are his favorite programming languages, but he begrudgingly admits that Javascript rules the world. Outside of work, Randall loves to read science fiction, advise startups, travel, and ski.
