Caylent Services

Artificial Intelligence & MLOps

Apply artificial intelligence (AI) to your data to automate business processes and predict outcomes. Gain a competitive edge in your industry and make more informed decisions.

Learn about some of the biggest announcements and highlights from SVP, AWS Utility Computing, Peter DeSantis's keynote, at AWS re:Invent 2022.

As if re:Invent wasn’t already exciting enough, during the first night of re:Invent 2022, Peter Desantis (SVP of AWS Utility Computing) gave a great talk on the future of cloud computing while also revealing exciting progress on both the hardware and software fronts. DeSantis took us on a deep dive into what’s going on under the hood with the technologies that power a lot of the AWS services we love and use the most. He touched on the importance of balance and the difficulties of increasing performance without having to sacrifice cost and security. With many technical challenges to overcome, AWS was able to supercharge their hardware and software to power the next generation of technology that has yet to come.

DeSantis’s main focus was on performance. Great performance is the accumulated result of innovating from the ground up and investing over time, a lot of time making tweaks and improvements under the hood that are transparent to most. There are many solutions that can provide cheap wins in the performance category but they all come at a cost. Maybe the solution is less secure or slightly more expensive, but as DeSantis assured the crowd, “... at AWS, we never compromise on security and we’re laser-focused on cost”.

AWS's Nitro System completely reimagines virtualization infrastructure with a combination of dedicated hardware and a lightweight hypervisor. The complex details are what really separate AWS from their competitors. Let’s take a look at what’s going on under the hood.

Amazon uses a specially designed chip to build their Nitro controller which gives their EC2 instances a boost in performance. Every Amazon EC2 instance introduced since 2014 uses a Nitro controller which runs all AWS code they use to manage and secure EC2 instances. The Nitro controller delivers improved security, networking performance, storage performance, and ability to turn any server into a full EC2 instance. It also supports bare metal EC2 instances and helps devote all server resources to customer workloads by eliminating the virtualization overhead. Needless to say, AWS Nitro plays a huge part in powering many customer workloads all over the world.

The first reveal DeSantis presented was their new AWS Nitro V5 chip which powers their Nitro V5 controller. The V5 controller comes with 2x the transistors than their previous chip (2x the computational power), 50% faster DRAM (50% more memory bandwidth), 2x more CPI bandwidth, 60% higher packet per second rate, 30% lower latency, and 40% better performance. Those are some drastic improvements, and they did it all while reducing the overall power consumption!

With the introduction of the new Nitro V5 chip comes a new EC2 instance offering that will utilize the new chip; the new C7gn network-optimized EC2 instance.

Many different types of applications will benefit from the new V5 chip but HPC applications will benefit the most. HPC applications need more than faster networking; they also need a faster processor that’s catered to their complex workloads. HPC applications tend to lean heavily on floating point and vector math, and with HPC applications at the forefront of their mind, AWS looked at improving the latest version of the Graviton3 processor using data-driven decisions for performance enhancements.

Introducing the new Graviton3E processor, HPC applications will get a nice boost in overall performance against various benchmarks such as 35% higher performance in HPL (a computational benchmark for linear algebra), 12% better in Gromacs (a molecular modeling library), and a 30% boost in financial options modeling.

With a new Nitro chip and a purpose built processor for HPC workloads, we can see where DeSantis is going with this. After introducing the new Graviton3E processor, he jumped straight into yet another reveal; the new HPC7g EC2 instance for compute-intensive HPC workloads. The new HPC7g EC2 instance provides all of the networking capabilities of the Nitro V5 chip along with all of the benefits from the Graviton3E processor. HPC workloads just got that much easier.

As exciting as hardware improvements are, they only make up one dimension of the future of scaling and performance. Software improvements play a complementary role that allows us to take yet another step forward in performance. Regarding networking improvements, AWS developed their own networking protocol called Scalable Reliable Datagram (SRD) that, at a high level, allows lower latency and higher throughput than traditional networking protocols for their computing environments. AWS built their Elastic Fabric Adapter to enable the lowest latency and highest throughput on their network and it does so by leveraging SRD technology. You can also leverage SRD and EFA via libfabric/MPI (what most HPC code is used to) or the driver in AL2.AWS’s SRD technology is really interesting but a deep dive would likely warrant an entirely separate blog post so we’ll keep it high level for now.

For those unfamiliar with AWS’s Elastic Fabric Network Adapter, it is a high performance network interface for running HPC and machine learning applications at scale.

The SRD protocol provides several notable benefits over the traditional TCP protocol such as network multi-pathing and faster packet transmission retries. DeSantis compared SRD to the invention of the wheel for the AWS ecosystem; an invention that was built for a specific purpose (providing low latency networking for HPC applications) but turned out to be beneficial in many different areas.

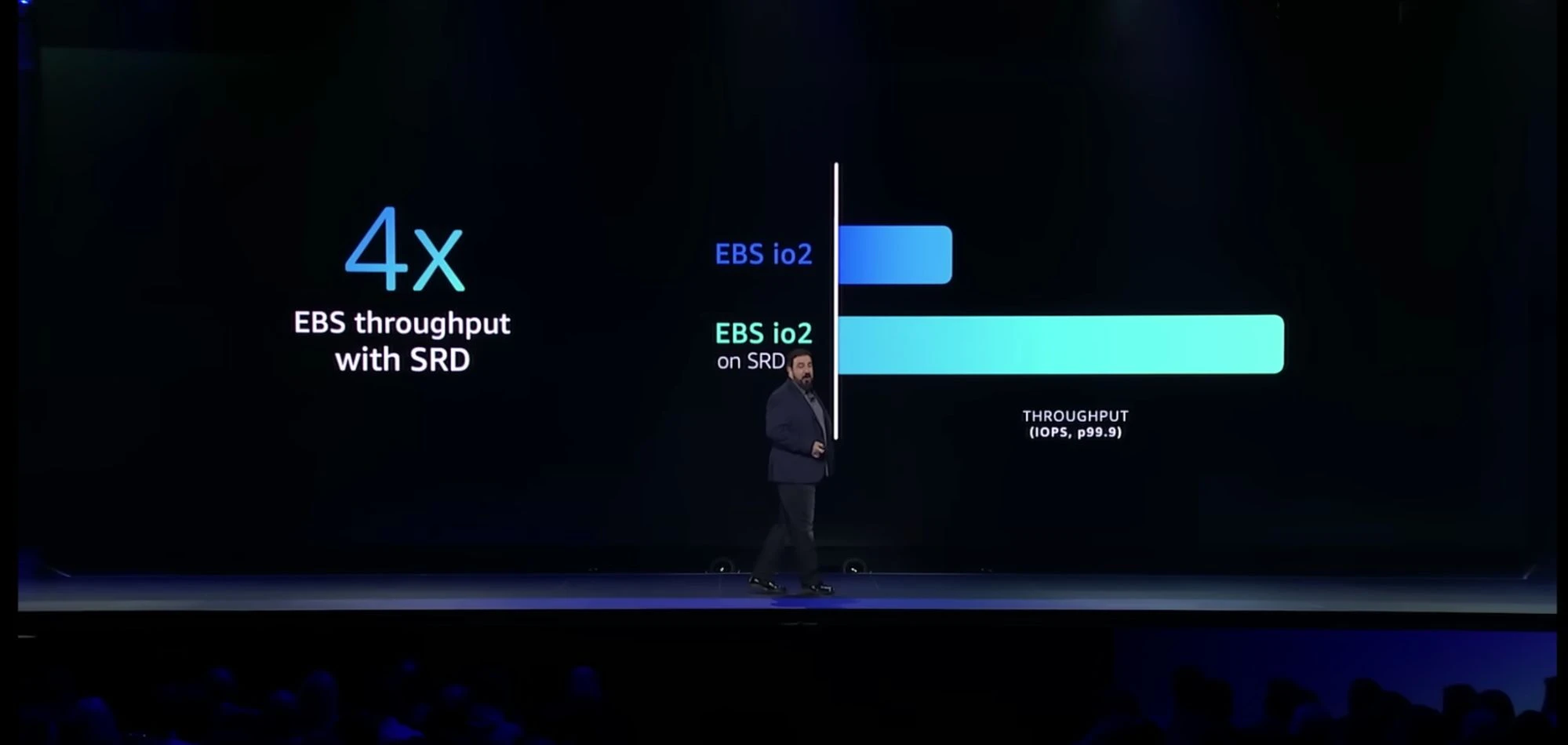

AWS wanted to bring more value to customers leveraging the benefits that SRD brings to the table, so they looked at the Amazon Elastic Block Store (EBS) service. For those unfamiliar with EBS, EBS provides block-level storage for EC2 instances and is used by all sorts of mission critical applications and I/O intensive databases.

EBS with SRD offers 4x more throughput than traditional EBS volumes and reduces tail latency that comes with multiple write operations for enhanced durability. DeSantis announced that all new EBS io2 volumes will now be running on SRD. Now you’ll get lower consistent latency and increased throughput at no additional cost to you. Now that’s a pretty huge improvement!

AWS didn’t want to stop there. With the desire to bring their SRD technology mainstream without the need to adopt EFA, they looked into how SRD could benefit their Elastic Network Adapter (ENA), which is their general-purpose networking interface for EC2. ENA makes use of the Nitro controller to offload work from the main EC2 server which allows customers to make more use of their EC2 instances.

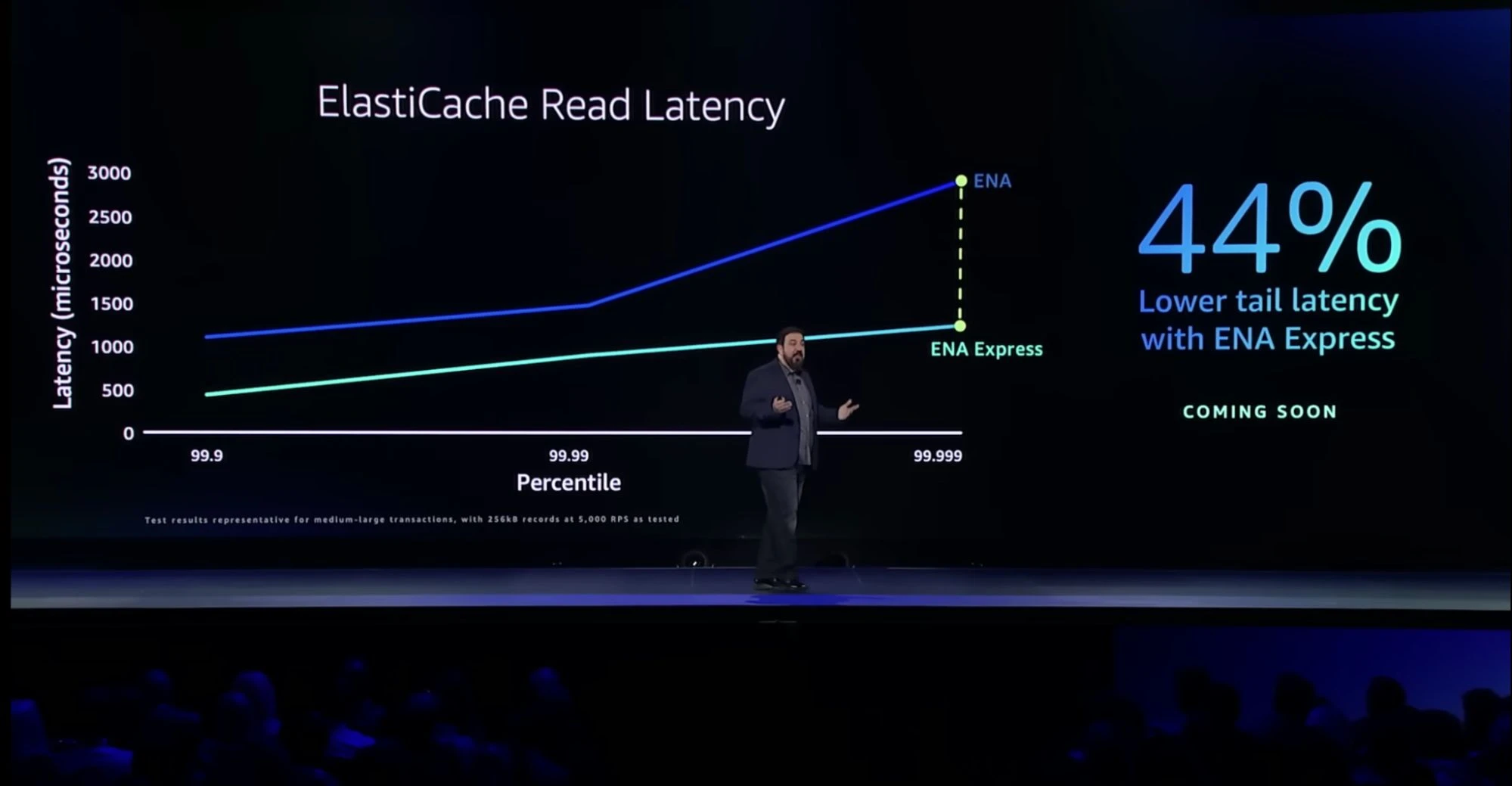

With that, AWS has created ENA Express, which brings their SRD technology to any networking protocol like TCP and UDP. Simply enable ENA Express on your ENA interface and like magic, you get increased throughput and lower latency from your network adapter. ENA Express brings huge improvements to the performance of any network-based service such as ElastiCache.

Since ENA Express takes advantage of SRD’s multi-pathing capabilities, ENA Express can enhance single-flow TCP connections from 5 GB/s to 25 GB/s, a 500% increase in throughput. All of these benefits come with zero code changes within your application code. How much more convenient could it get?

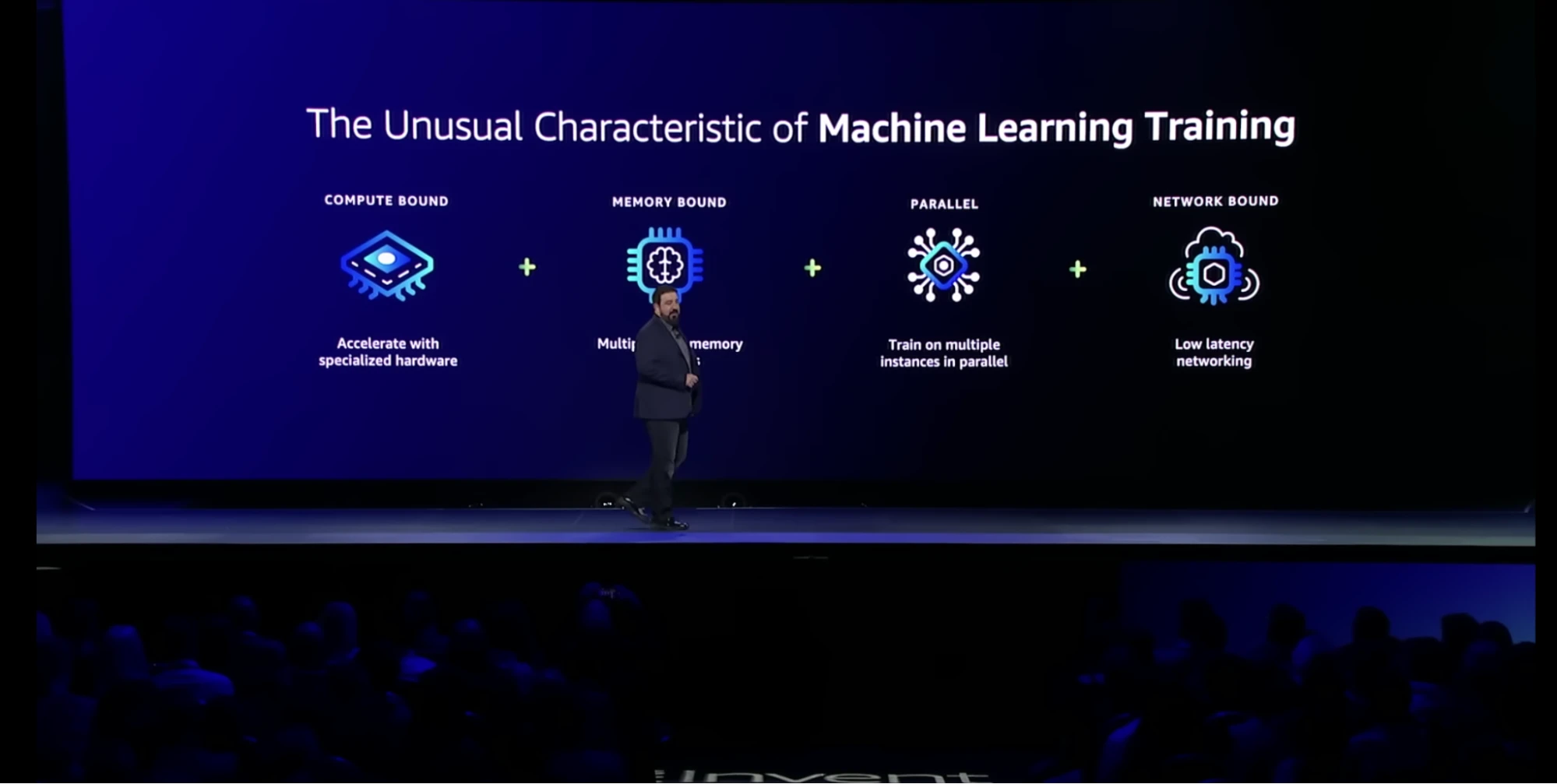

When you think about a performance engineering problem, you typically try to identify one dimension that’s holding you back but in the world of training machine learning (ML) models, there are many different dimensions that could potentially be a bottleneck. Ultimately, the goal of reaching convergence in the fastest and most cost-effective way has always stayed constant. Convergence is when the machine learning model no longer benefits from additional training, or in other words, reaches its peak form.

Each iteration of the model training process takes a lot of computational power so there’s a need for specialized hardware to minimize the cost of reaching convergence. The purpose-built ML hardware called the Trn1 instance has 16 optimized trainium processors, 512 GB of memory per instance, 800 GB/s network bandwidth and uses EFA and SRD. Needless to say, the Trn1 was AWS’s most cost-effective and high-performance deep learning instance available… until now!

With the ever-increasing size and complexity of machine learning models, AWS partnered with PyTorch to create a new ring-of-rings hierarchical machine learning algorithm which allows up to 75% faster synchronization between the sets of instances that are conducting the training.

If you want to get the whole deep dive of the ring-of-rings algorithm, here’s a link to DeSantis’s keynote speech where he discusses this architecture.

In addition to the ring-of-rings algorithmic improvements to deep learning training, AWS introduced a new and improved version of their old Trn1 instance called the Trn1n instance.

The Trn1n EC2 instance class is a network-optimized version of the Trn1 class with 1.6 TB/s EFA networking throughput which allows for even faster ML training for ultra-large models. Talk about speed!

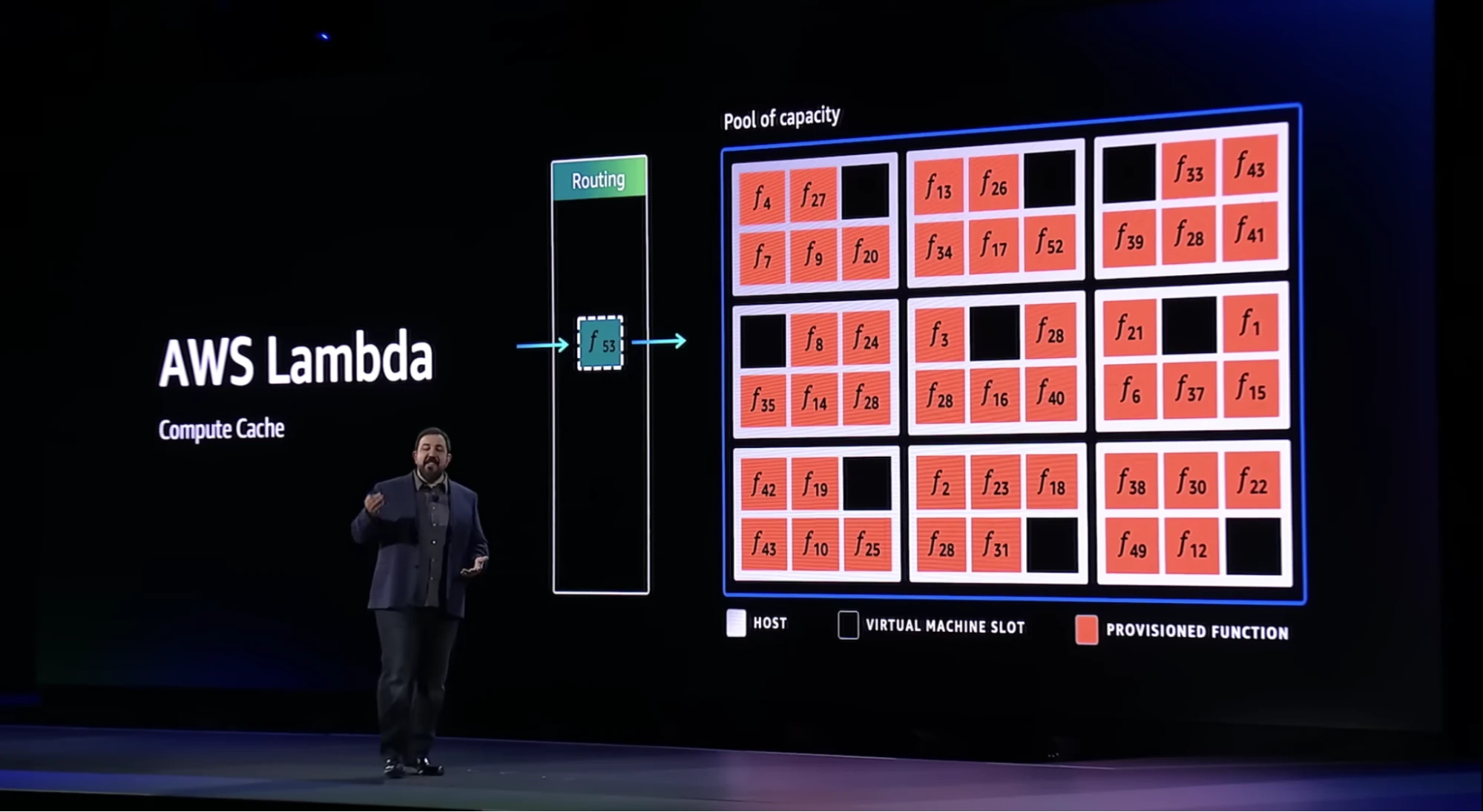

AWS’s deep investment in performance is happening across all services at every level of the stack. AWS Lambda, their first serverless computing service, has come a long way since it first launched in 2014. With over 1 million active customers and 10 trillion requests per month, it’s undeniably changing the way applications are being built today.

In a nutshell, AWS Lambda acts as a compute cache, not a memory cache, where the items being cached aren’t datum, but units of compute. Each slot within the cache is a virtual machine ready to run a customer’s function. In the early days of Lambda, the EC2 T2 micro instances were what ran customer Lambda functions under the hood. Whenever a cache miss happens, meaning the lambda function is not loaded into a runtime environment on one of the T2 instances, the function incurs an additional cost called a cold start (tail latency).

Lambda functions work best when there’s a steady stream of usage but not as well when there are spikes in traffic that require new capacity to be provisioned. In order to minimize cold starts and deliver more predictable capacity, Lambda started leveraging Firecracker; a purpose-built virtualization technology that delivers secure and fast microVMs for serverless computing. These microVMs are able to launch much faster than the traditional T2 micro instances and also allow the lambda cache to become more efficient without actually increasing the size of the cache. With the bootup time decreasing from seconds (T2) to fractions of a second (microVMs), AWS was able to decrease Lambda cold start times by 50%.

Decreasing the tail latency of Lambda by 50% is nice and all, but why not almost entirely get rid of it? Well, they basically did! With the addition of Lambda SnapStart, cold starts are now nearly indistinguishable compared to a Lambda cache hit. Lambda SnapStart takes a snapshot of the entire initialization steps during the life cycle of a cold start and uses the snapshot when the lambda function has to boot up a new microVM. Cold start times have decreased 90% with SnapStart and it all comes at no additional cost to customers and no extra configurations.

If you want to learn about the technical innovations regarding Lambda SnapStart, you can find the topic here.

The innovations AWS has made in both the hardware and software fronts are exciting to say the least. The hardware improvements with the new Nitro V5 chip will enable them to continue to supercharge any services that leverage their underlying hardware and the software improvements will only continue to make their services faster and more reliable. With customers at the forefront of their data-driven decision making, everyday users will continue to see improvements within the services that power their applications. We’re ecstatic to see what they reveal at re:Invent 2023!

Caylent Services

Apply artificial intelligence (AI) to your data to automate business processes and predict outcomes. Gain a competitive edge in your industry and make more informed decisions.

Caylent Catalysts™

Evolve beyond clock speed and core count comparisons and realize real world performance for modern cloud workloads.

Caylent Catalysts™

Design new cloud native applications by providing secure, reliable and scalable development foundation and pathway to a minimum viable product (MVP).

Caylent Services

Quickly establish an AWS presence that meets technical security framework guidance by establishing automated guardrails that ensure your environments remain compliant.

In Werner Vogels’ 2025 AWS re:Invent keynote, he reminded us that as technology continues to evolve, developers must evolve too. Discover how engineers are becoming “renaissance developers” and why ownership matters more than ever.

Learn about all of the exciting and innovative announcements unveiled during Peter DeSantis and Dave Brown's AWS re:Invent 2025 keynote.

Learn about all of the AI announcements unveiled at AWS re:Invent 2025, including the Amazon Nova 2 family, updates to Amazon Bedrock AgentCore, Kiro autonomous agents, Amazon S3 Vectors, and more.