Caylent Catalysts™

Generative AI Knowledge Base

Learn how to improve customer experience with custom chatbots powered by generative AI.

Using Anthropic Claude and Amazon Bedrock to unlock better interactions for customers.

A chatbot intent is the objective a user has when interacting with a customer service chatbot during a conversation. Within a chatbot application framework, predefined intents streamline user interactions by offering a fixed selection of options, and each intent is then processed through established logic to fulfill the user's request. Chatbots can now also use Large Language Model (LLM) agents to break a task into manageable units and fulfill the user's objective step by step. On AWS, for example, one can use Amazon Lex as a traditional chatbot solution and combine it with an LLM agent to fulfill complicated user requests.

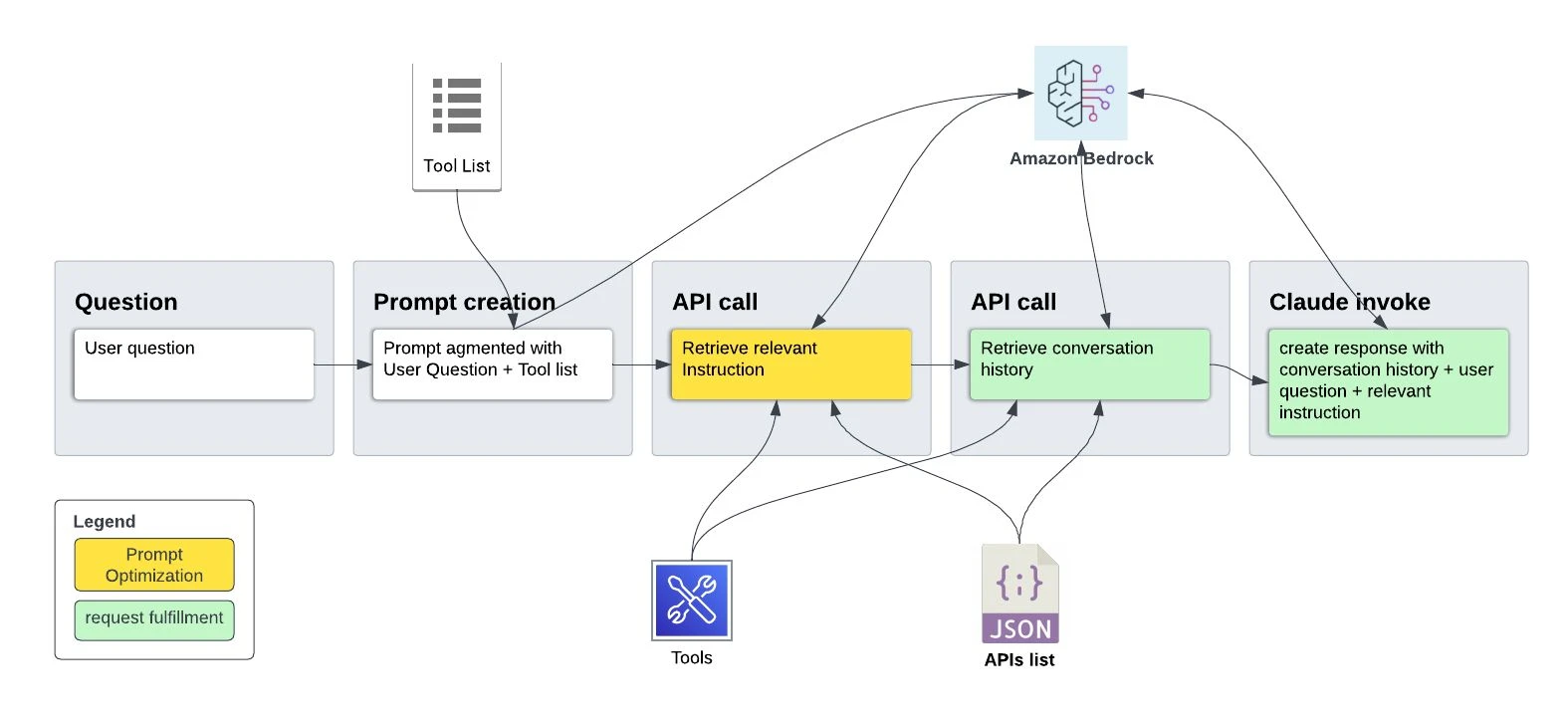

In today's post I propose an approach that uses LLMs not only to fulfill requests but also to identify user intent and optimize the prompt accordingly. I show two implementations: a) Anthropic Claude's function calling capability via Amazon Bedrock, and b) a Bedrock agent that uses Claude as its LLM.

Consider a use case where the end users of your chatbot are not familiar with prompt engineering best practices and interact with it using only short, simple questions. You still want them to get complete answers, formatted according to their specific requirements, and those requirements may differ from question to question. For some questions you may also need to provide few-shot examples so that the LLM produces the correct response. Therefore, we need to make sure the appropriate prompt template is selected, or the prompt is augmented with relevant instructions, depending on the user question.

In the image below, I lay out the end-to-end steps the LLM should take to answer the user question appropriately, including the intent resolution and prompt optimization steps.

For the dataset, I use the Topical-Chat dataset, which contains conversations between pairs of agents. Specifically, I used the `train.json` file and kept only the conversation IDs and the conversations themselves.
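A minimal sketch of that trimming step, assuming the raw file maps each conversation ID to an object with a `content` list whose turns carry at least `message` and `agent` keys; any other fields (per-turn sentiment, knowledge sources, ratings, article URL) are dropped. The function name `trim_topical_chat` and the file paths are illustrative assumptions, not part of the original setup.

```python
import json


def trim_topical_chat(src_path: str, dst_path: str) -> dict:
    """Keep only each conversation ID and its (message, agent) turns."""
    with open(src_path, "r", encoding="utf-8") as f:
        raw = json.load(f)

    # Rebuild every conversation with just the fields the chatbot needs.
    trimmed = {
        conv_id: {
            "content": [
                {"message": turn["message"], "agent": turn["agent"]}
                for turn in conv["content"]
            ]
        }
        for conv_id, conv in raw.items()
    }

    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(trimmed, f, indent=2)
    return trimmed
```

For example, `trim_topical_chat("raw/train.json", "train.json")` would write a trimmed `train.json` of the shape the tools read; the `raw/` source path is hypothetical.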

An excerpt of one trimmed conversation:

```json
"t_bde29ce2-4153-4056-9eb7-f4ad710505fe": {
  "content": [
    {
      "message": "Are you a fan of Google or Microsoft?",
      "agent": "agent_1"
    },
    {
      "message": "Both are excellent technology they are helpful in many ways. For the security purpose both are super.",
      "agent": "agent_2"
    },
    {
      "message": "I'm not a huge fan of Google, but I use it a lot because I have to. I think they are a monopoly in some sense. ",
      "agent": "agent_1"
    },
    //// TRUNCATED CONVERSATION ////
    {
      "message": "I heard that too. Well, it was nice chatting with you. Have a good day. ",
      "agent": "agent_1"
    }
  ]
}
```

The function calling approach requires three main components.

First, we need to create the functions Claude needs to address user inquiries. Notably, `get_summary_instruction()`, `get_sentiment_instruction()`, and `get_general_instruction()` route user queries to the instructions relevant to each type of question. Note the XML tags in the tool descriptions: this Claude function calling prompt expects tools to be described in that XML structure. Each function has a description that helps the LLM decide which one to use based on the user question.

```python
get_summary_instruction_description = """
<tool_description>
<tool_name>get_summary_instruction</tool_name>
<description> It does not take any input parameter and returns instruction for conversation summary analysis question</description>
</tool_description>
"""

get_sentiment_instruction_description = """
<tool_description>
<tool_name>get_sentiment_instruction</tool_name>
<description>
It does not take any input parameter and returns instruction for conversation sentiment analysis question</description>
</tool_description>
"""

get_general_instruction_description = """
<tool_description>
<tool_name>get_general_instruction</tool_name>
<description> It does not take any input parameter and returns instruction for a general question where it is not about summary or sentiment</description>
</tool_description>
"""
```

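All three descriptions share one XML layout, so they can also be generated instead of written by hand. The following sketches a hypothetical helper, `build_tool_description`, that renders that layout, with an optional parameter list of the kind `get_conversation` needs. It escapes `<`, `>` and `&` so description text cannot break the XML; this helper is an illustration, not part of the original code.

```python
from xml.sax.saxutils import escape


def build_tool_description(name, description, parameters=None):
    """Render one <tool_description> block.

    `parameters` is an optional list of (name, type, description) tuples.
    """
    lines = [
        "<tool_description>",
        f"<tool_name>{escape(name)}</tool_name>",
        f"<description>{escape(description)}</description>",
    ]
    if parameters:
        lines.append("<parameters>")
        for p_name, p_type, p_desc in parameters:
            lines += [
                "<parameter>",
                f"<name>{escape(p_name)}</name>",
                f"<type>{escape(p_type)}</type>",
                f"<description>{escape(p_desc)}</description>",
                "</parameter>",
            ]
        lines.append("</parameters>")
    lines.append("</tool_description>")
    return "\n".join(lines)
```

For instance, calling it with `"get_general_instruction"` and that tool's description text yields the same tags as the hand-written string, apart from whitespace.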
In this example, I specify three instructions: the first answers questions about summarizing the conversation, the second retrieves the sentiment of the conversation, and the last covers any other question. In other words, the chatbot is optimized for questions about conversation summary and sentiment, while it can still be used for any other question. The detailed instructions for each scenario are listed below. This is where you add any specific guidance (for example, formatting rules, a step-by-step guide, or few-shot examples) that the LLM should pick up when answering the user question.

```python
# Provide the instruction for summary question to be passed to the prompt
def get_summary_instruction():
    instruction = "Carefully consider a walkthrough of all the messages in the provided conversion history. Present the list of topics in the conversation in numbered bullet points. Using this, answer the question."
    return {"instruction": instruction}


# Provide the instruction for sentiment question to be passed to the prompt
def get_sentiment_instruction():
    instruction = "Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these following categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain a mix of sentiment from the categories. Also make sure to provide reasoning behind your decision in bullet points"
    return {"instruction": instruction}


# Provide the instruction for general question to be passed to the prompt
def get_general_instruction():
    instruction = "Carefully consider all the messages in the provided conversion history. Using this, answer the question."
    return {"instruction": instruction}
```

The last tool retrieves the conversation history for the conversation ID passed in the user question. In particular, it reads the conversation history from a JSON file on disk and returns it along with the question and the selected instruction.

```python
import json

get_conversation_description = """
<tool_description>
<tool_name>get_conversation</tool_name>
<description>
receive instruction from either get_sentiment_instruction_description, get_summary_instruction_description or get_general_instruction_description function and return instruction, user question and 1 JSON object containing the conversation history for the given conversation id.</description>
<parameters>
<parameter>
<name>id</name>
<type>string</type>
<description>conversation id</description>
</parameter>
<parameter>
<name>question</name>
<type>string</type>
<description>user question</description>
</parameter>
<parameter>
<name>instruction</name>
<type>string</type>
<description>instruction</description>
</parameter>
</parameters>
</tool_description>
"""


# Parameter names match the <parameter> names above, so the model's call maps directly onto them
def get_conversation(id: str, question: str, instruction: str):
    # Load the trimmed conversations from local disk
    with open('train.json', 'r', encoding='utf-8') as f:
        data = json.load(f)
    # Return the conversation together with the question and the selected instruction
    return {"conversation": data[id]['content'], "question": question, "instruction": instruction}
```
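Because `get_conversation` hands back the raw message list, structural questions such as the number of turns also have a deterministic answer that can be used to sanity-check the model's reply, since LLMs can miscount long lists. The sketch below is a hypothetical helper, `count_messages`, that is not one of the tools exposed to Claude; it assumes every message counts as one turn and reuses the `train.json` layout shown earlier.

```python
import json


def count_messages(conversation_id: str, path: str = "train.json") -> int:
    """Deterministically count the messages stored for one conversation ID."""
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)
    # Each element of "content" is one message from agent_1 or agent_2.
    return len(data[conversation_id]["content"])
```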

The final step is to list all the tools available to the LLM.

```python
list_of_tools_specs = [
    get_general_instruction_description,
    get_summary_instruction_description,
    get_sentiment_instruction_description,
    get_conversation_description,
]
```

With the tools in place, the next step is the prompt template for function calling. The template has four main sections: the definition of the system role, Claude's function calling template, the list of available tools, and the routing instructions. Crucially, those instructions direct Claude to first identify the relevant instruction from the available options before making any other function call. This ensures the prompt is augmented with any necessary instructions before the final response is generated.

```python
def create_prompt(tools_string, user_input):
    prompt_template = f"""
\n\nHuman:
You are an expert AI assistant that has been equipped with the following function(s) to help you answer user questions with information about a given conversation history. Your goal is to answer the user's question to the best of your ability, using the function(s) to gather more information if necessary to better answer the question. The result of a function call will be added to the conversation history as an observation.

In this environment you have access to a set of tools you can use to answer the user's question.

You may call them like this. Only invoke one function at a time and wait for the results before invoking another function:
<function_calls>
<invoke>
<tool_name>$TOOL_NAME</tool_name>
<parameters>
<$PARAMETER_NAME>$PARAMETER_VALUE</$PARAMETER_NAME>
...
</parameters>
</invoke>
</function_calls>

Here are the tools available:
<tools>
{tools_string}
</tools>

first find the appropriate instruction from the get_general_instruction_description, get_sentiment_instruction_description or get_summary_instruction_description before running another function.

if the question is not about the sentiment or summary of the conversation then use instruction provided by get_general_instruction_description.

{user_input}
\n\nAssistant:
"""
    return prompt_template
```

Now we have everything needed to test the solution. I asked three questions: one about the conversation summary, one about the conversation sentiment, and a general question that is about neither. Let's try them and follow the end-to-end process.
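The runs below alternate between Claude's output and tool results executed locally. One way to drive that exchange is sketched here, assuming a Claude model on Amazon Bedrock that accepts the legacy Human/Assistant text completion request body (the model ID `anthropic.claude-v2` is an assumption), that the tool specs are joined with newlines into `tools_string`, and that tool results are appended in the `<function_results>` layout visible in the logs. The helpers `call_claude`, `parse_invoke`, and `run_function_calling` are hypothetical names.

```python
import json
import re

# Assumption: a Claude model on Bedrock that accepts the legacy
# Human/Assistant text completion body.
MODEL_ID = "anthropic.claude-v2"


def call_claude(client, prompt: str) -> str:
    """Send the prompt to Claude via Bedrock and return the completion text."""
    body = json.dumps({
        "prompt": prompt,
        "max_tokens_to_sample": 1000,
        # Stop right after a function call so the tool can be run locally.
        "stop_sequences": ["\n\nHuman:", "</function_calls>"],
    })
    response = client.invoke_model(
        modelId=MODEL_ID, body=body,
        contentType="application/json", accept="application/json",
    )
    return json.loads(response["body"].read())["completion"]


def parse_invoke(text: str):
    """Return (tool_name, params) for the last <invoke> block, or None."""
    invokes = re.findall(r"<invoke>(.*?)</invoke>", text, re.DOTALL)
    if not invokes:
        return None
    block = invokes[-1]
    name = re.search(r"<tool_name>(.*?)</tool_name>", block, re.DOTALL).group(1).strip()
    params = {}
    params_match = re.search(r"<parameters>(.*?)</parameters>", block, re.DOTALL)
    if params_match:
        # Each parameter is an element named after it, e.g. <id>...</id>.
        for key, value in re.findall(r"<(\w+)>(.*?)</\1>", params_match.group(1), re.DOTALL):
            params[key] = value.strip()
    return name, params


def run_function_calling(client, prompt: str, tools: dict, max_steps: int = 5) -> str:
    """Alternate between Claude and local tools until Claude stops calling tools."""
    for _ in range(max_steps):
        completion = call_claude(client, prompt)
        call = parse_invoke(completion)
        if call is None:
            return completion  # No tool call: this is the final answer.
        name, params = call
        result = tools[name](**params)
        # Assumes the matched stop sequence is not part of the completion,
        # so close the call and append the result as in the run logs.
        prompt += (
            completion
            + "</function_calls>\n\n<function_results>\n<result>\n"
            + f"<tool_name>{name}</tool_name>\n<stdout>\n{result}\n</stdout>\n"
            + "</result>\n</function_results>\n"
        )
    raise RuntimeError("No final answer after max_steps tool calls")

# Usage sketch (assumes the earlier definitions and configured AWS credentials):
# import boto3
# client = boto3.client("bedrock-runtime")
# tools_string = "\n".join(list_of_tools_specs)
# prompt = create_prompt(tools_string, "Can you summarize the conversion with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?")
# tools = {"get_summary_instruction": get_summary_instruction,
#          "get_sentiment_instruction": get_sentiment_instruction,
#          "get_general_instruction": get_general_instruction,
#          "get_conversation": get_conversation}
# print(run_function_calling(client, prompt, tools))
```

Stopping on `</function_calls>` keeps Claude from inventing a tool result; the loop supplies the real one and lets Claude continue from there.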

Question about summary of the conversation:

Here I ask Claude the following question: “Can you summarize the conversion with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?”

And here are the run logs:

Human:

You are an expert AI assistant that has been equipped with the following function(s) to help you answer user questions with information about a given conversation history. Your goal is to answer the user's question to the best of your ability, using the function(s) to gather more information if necessary to better answer the question. The result of a function call will be added to the conversation history as an observation.

In this environment you have access to a set of tools you can use to answer the user's question.

You may call them like this. Only invoke one function at a time and wait for the results before invoking another function:

<function_calls>

<invoke>

<tool_name>$TOOL_NAME</tool_name>

<parameters>

<$PARAMETER_NAME>$PARAMETER_VALUE</$PARAMETER_NAME>

...

</parameters>

</invoke>

</function_calls>

Here are the tools available:

<tools>

<tool_description>

<tool_name>get_general_instruction</tool_name>

<description> It does not take any input parameter and returns instruction for a general question where it is not about summary or sentiment</description>

</tool_description>

<tool_description>

<tool_name>get_summary_instruction</tool_name>

<description> It does not take any input parameter and returns instruction for conversation summary analysis question</description>

</tool_description>

<tool_description>

<tool_name>get_sentiment_instruction</tool_name>

<description>

It does not take any input parameter and returns instruction for conversation sentiment analysis question</description>

</tool_description>

<tool_description>

<tool_name>get_conversation</tool_name>

<description>

receive instruction from either get_sentiment_instruction_description or get_summary_instruction_description function and return instruction, user question and 1 JSON object containing the conversation history for the given conversation id.</description>

<parameters>

<parameter>

<name>id</name>

<type>string</type>

<description>conversation id</description>

</parameter>

<parameter>

<name>question</name>

<type>string</type>

<description>user question</description>

</parameter>

<parameter>

<name>instruction</name>

<type>string</type>

<description>instruction</description>

</parameter>

</parameters>

</tool_description>

</tools>

first find the appropriate instruction from the get_general_instruction_description, get_sentiment_instruction_description or get_summary_instruction_description before running other function.

if the question is not about the sentiment or summary of the conversation then use instruction provided by get_general_instruction_description.

Can you summarize the conversion with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?

Assistant:

<function_calls>

<invoke>

<tool_name>get_summary_instruction</tool_name>

<parameters>

</parameters>

</invoke>

</function_calls>

<function_results>

<result>

<tool_name>get_summary_instruction</tool_name>

<stdout>

{'instruction': 'Carefully consider a walkthrough of all the messages in the provided conversion history. Present the list of topics in the conversation in numbered bullet points. Using this, answer the question.'}

</stdout>

</result>

</function_results>

To summarize the conversation, I will first gather the conversation details by calling the get_conversation function:

<function_calls>

<invoke>

<tool_name>get_conversation</tool_name>

<parameters>

<id>t_bde29ce2-4153-4056-9eb7-f4ad710505fe</id>

<question>Can you summarize the conversion with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?</question>

<instruction>{'instruction': 'Carefully consider a walkthrough of all the messages in the provided conversion history. Present the list of topics in the conversation in numbered bullet points. Using this, answer the question.'}</instruction>

</parameters>

</invoke>

</function_calls>

<function_results>

<result>

<tool_name>get_conversation</tool_name>

<stdout>

{'conversation': [{'message': 'Are you a fan of Google or Microsoft?', 'agent': 'agent_1'}, {'message': 'Both are excellent technology they are helpful in many ways. For the security purpose both are super.', 'agent': 'agent_2'},—----- TRUNCATED CONVERSATION HISTORY —---- {'message': 'I heard that too. Well, it was nice chatting with you. Have a good day. ', 'agent': 'agent_1'}], 'question': 'Can you summarize the conversion with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?', 'instruction': "{'instruction': 'Carefully consider a walkthrough of all the messages in the provided conversion history. Present the list of topics in the conversation in numbered bullet points. Using this, answer the question.'}"}

</stdout>

</result>

</function_results>

Based on the conversation history, here is a summary of the key topics discussed:

1. Opinions on Google and Microsoft - Both agents shared their thoughts on these tech companies.

2. Google's products and services - This included search, ads, cloud computing, Chrome browser, YouTube, etc.

3. Interesting Google facts - Like using goats to cut grass.

4. Fish and sea creatures - Including seahorses, jellyfish, dolphins.

5. Cats - Agent 1 mentioned liking dogs more. Discussed cats sleeping a lot.

6. Cat hearing abilities.

So in summary, the agents discussed various tech companies, Google's offerings, some fun trivia, and also talked about their thoughts on fish, seahorses, jellyfish, dolphins and cats.

We can confirm that the final response picked up our instruction: it summarizes the topics discussed in the conversation and presents them in a numbered list.

Question about sentiment of the conversation:

Here I ask Claude the following question: “What is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?”

And here are the truncated run logs:

—----- TRUNCATED LOG —----

Assistant:

<function_calls>

<invoke>

<tool_name>get_sentiment_instruction</tool_name>

<parameters>

</parameters>

</invoke>

</function_calls>

<function_results>

<result>

<tool_name>get_sentiment_instruction</tool_name>

<stdout>

{'instruction': 'Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these following categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain a mix of sentiment from the categories. Also make sure to provide reasoning behind your decision in bullet points'}

</stdout>

</result>

</function_results>

<function_calls>

<invoke>

<tool_name>get_conversation</tool_name>

<parameters>

<id>t_bde29ce2-4153-4056-9eb7-f4ad710505fe</id>

<question>what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?</question>

<instruction>{'instruction': 'Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these following categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain a mix of sentiment from the categories. Also make sure to provide reasoning behind your decision in bullet points'}</instruction>

</parameters>

</invoke>

</function_calls>

<function_results>

<result>

<tool_name>get_conversation</tool_name>

<stdout>

{'conversation': [{'message': 'Are you a fan of Google or Microsoft?', 'agent': 'agent_1'}, {'message': 'Both are excellent technology they are helpful in many ways. For the security purpose both are super.', 'agent': 'agent_2'}, —----- TRUNCATED CONVERSATION HISTORY —---- {'message': 'I heard that too. Well, it was nice chatting with you. Have a good day. ', 'agent': 'agent_1'}], 'question': 'what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?', 'instruction': "{'instruction': 'Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these follwoing categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain mix of sentiment from the categories. Also make sure to provided reasoning behind your decision in bullet points'}"}

</stdout>

</result>

</function_results>

Based on the conversation history, the sentiments expressed include:

- Happy: The conversation starts out curious and friendly, with the agents discussing technology companies like Google in a positive way. There is laughter and lighthearted banter about goats and cats. This indicates happiness and amusement.

- Neutral: Much of the conversation is also neutral in tone, with the agents exchanging factual information about technology, fish, cats, etc. There is no strong sentiment attached to these factual statements.

- Curious: Both agents seem curious to learn more, asking each other questions about various topics. This shows an interest to dive deeper into the subjects.

So in summary, the predominant sentiments expressed in this conversation are happy, neutral and curious. There is a sense of amusement, along with genuine curiosity to learn more about each other's interests. The tone remains friendly and positive overall.

As you can see, the response incorporated our instruction to classify the sentiment of the conversation using the predefined categories.

General question about the conversation:

Here I ask Claude a question that is about neither the sentiment nor the summary of the conversation: “how many turns of conversation exists in conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?” This showcases the scenario where the LLM correctly uses the general instruction, because the question is about neither “conversation summary” nor “conversation sentiment”.

And here are the run logs:

—----- TRUNCATED LOG —----

Assistant:

<function_calls>

<invoke>

<tool_name>get_general_instruction</tool_name>

<parameters>

</parameters>

</invoke>

</function_calls>

<function_results>

<result>

<tool_name>get_general_instruction</tool_name>

<stdout>

{'instruction': 'Carefully consider all the messages in the provided conversion history. Using this, answer the question.'}

</stdout>

</result>

</function_results>

To answer the question about the number of turns in the conversation, I will call the get_conversation function to retrieve the conversation history:

<function_calls>

<invoke>

<tool_name>get_conversation</tool_name>

<parameters>

<id>t_bde29ce2-4153-4056-9eb7-f4ad710505fe</id>

<question>how many turns of conversation exists in conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?</question>

<instruction>{'instruction': 'Carefully consider all the messages in the provided conversion history. Using this, answer the question.'}</instruction>

</parameters>

</invoke>

</function_calls>

<function_results>

<result>

<tool_name>get_conversation</tool_name>

<stdout>

{'conversation': [{'message': 'Are you a fan of Google or Microsoft?', 'agent': 'agent_1'}, {'message': 'Both are excellent technology they are helpful in many ways. For the security purpose both are super.', 'agent': 'agent_2'}, —----- TRUNCATED CONVERSATION HISTORY —---- {'message': 'I heard that too. Well, it was nice chatting with you. Have a good day. ', 'agent': 'agent_1'}], 'question': 'how many turns of conversation exists in conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?', 'instruction': "{'instruction': 'Carefully consider all the messages in the provided conversion history. Using this, answer the question.'}"}

</stdout>

</result>

</function_results>

Based on the conversation history, there are 29 messages between the two agents. Since each message represents a turn in the conversation, there are 29 turns of conversation in this conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe.

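Therefore, the answer is 29 turns of conversation.

Now let's see how to achieve the same thing using a Bedrock agent. Again, there are three main components: the tool functions, an OpenAPI schema describing the APIs the agent can call, and a Lambda function that invokes the tools.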

Let’s take a quick look at the tools I defined for the agent. They live in a module named `tools`, which the Lambda function imports. Each function's docstring documents its goal; the agent itself chooses which API to call from the descriptions in the OpenAPI schema, which mirror these docstrings.

```python
import json

import boto3


def summary_gen_tool():
    """
    Use this tool only to return instruction for conversation summary analysis questions before calling get_conversation_tool(). It takes no input.
    """
    instruction = "Carefully consider a walkthrough of all the messages in the provided conversion history. Present the list of topics in the conversation in numbered bullet points. Using this, answer the question."
    return instruction


def sentiment_gen_tool():
    """
    Use this tool only to return instruction for conversation sentiment analysis questions before calling get_conversation_tool(). It takes no input.
    """
    instruction = "Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these following categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain a mix of sentiment from the categories. Also make sure to provide reasoning behind your decision in bullet points"
    return instruction


def other_question_gen_tool():
    """
    Use this tool only to return instruction for a general question where it is not about summary or sentiment before calling get_conversation_tool(). It takes no input.
    """
    instruction = "Carefully consider all the messages in the provided conversion history. Using this, answer the question."
    return instruction


def get_conversation_tool(id, question, instruction):
    """
    Use this tool to receive instruction from either summary_gen_tool, sentiment_gen_tool or other_question_gen_tool and return instruction, user question and 1 JSON object containing the conversation history for the given conversation id.
    """
    # Replace with the bucket that holds the trimmed train.json
    s3_bucket = 'YOUR-BUCKET'
    file_key = 'train.json'

    # Read the conversations file from S3 and parse it
    s3 = boto3.client('s3')
    response = s3.get_object(Bucket=s3_bucket, Key=file_key)
    f = response['Body'].read().decode('utf-8')
    data = json.loads(f)

    conversation_history = data[id]['content']
    return {"conversation": conversation_history, "question": question, "instruction": instruction}
```

Next, we define the APIs the agent can invoke in a JSON file that follows the OpenAPI schema. You need to upload this file to an S3 bucket and point to it when defining the agent. As before, three APIs return the instruction specific to the user question (summary, sentiment, or any other question), and a last API passes the user question, the instruction selected by the LLM, and the conversation history back to the LLM to create the final response. Notably, each path needs a description, its input parameters, and its output. The schema for the APIs is listed below.

```json
{
  "openapi": "3.0.0",
  "info": {
    "title": "Agent Assistant API",
    "version": "1.0.0",
    "description": "APIs helping users with creating summarization or sentiment or general answer to the user question."
  },
  "paths": {
    "/get_summary_instruction": {
      "get": {
        "summary": "Retrieve instruction for conversation summary analysis question",
        "description": "It does not take any input parameter and returns instruction for questions asked specifically about summarizing the conversation",
        "operationId": "querySummaryInstruction",
        "responses": {
          "200": {
            "description": "Retrieve instruction for conversation summary analysis question",
            "content": {
              "application/json": {
                "schema": {
                  "type": "object",
                  "properties": {
                    "instruction": {
                      "type": "string",
                      "description": "The instruction for the user question"
                    }
                  }
                }
              }
            }
          }
        }
      }
    },
    "/get_sentiment_instruction": {
      "get": {
        "summary": "Retrieve instruction for conversation sentiment analysis question",
        "description": "It does not take any input parameter and returns instruction for questions asked specifically about the sentiment of the conversation",
        "operationId": "querySentimentInstruction",
        "responses": {
          "200": {
            "description": "Retrieve instruction for conversation sentiment analysis question",
            "content": {
              "application/json": {
                "schema": {
                  "type": "object",
                  "properties": {
                    "instruction": {
                      "type": "string",
                      "description": "The instruction for the user question"
                    }
                  }
                }
              }
            }
          }
        }
      }
    },
    "/get_other_question_instruction": {
      "get": {
        "summary": "Retrieve instruction for a general question not about sentiment or summary of the conversation",
        "description": "It does not take any input parameter and returns instruction for a general question where it is not about summary or sentiment",
        "operationId": "queryGeneralInstruction",
        "responses": {
          "200": {
            "description": "Retrieve instruction for a general question not about sentiment or summary of the conversation",
            "content": {
              "application/json": {
                "schema": {
                  "type": "object",
                  "properties": {
                    "instruction": {
                      "type": "string",
                      "description": "The instruction for the user question"
                    }
                  }
                }
              }
            }
          }
        }
      }
    },
    "/gen_conversation": {
      "get": {
        "summary": "Retrieve conversation history given conversation id",
        "description": "Retrieve conversation history for the conversation id passed in the user question",
        "operationId": "genConversation",
        "parameters": [
          {
            "name": "ConversationId",
            "in": "query",
            "description": "Conversation id",
            "required": true,
            "schema": { "type": "string" }
          },
          {
            "name": "question",
            "in": "query",
            "description": "User question",
            "required": true,
            "schema": { "type": "string" }
          },
          {
            "name": "instruction",
            "in": "query",
            "description": "instruction for answering the question",
            "required": true,
            "schema": { "type": "string" }
          }
        ],
        "responses": {
          "200": {
            "description": "conversation history",
            "content": {
              "application/json": {
                "schema": {
                  "type": "object",
                  "properties": {
                    "conversation": {
                      "type": "string",
                      "description": "conversation history"
                    },
                    "question": {
                      "type": "string",
                      "description": "user question"
                    },
                    "instruction": {
                      "type": "string",
                      "description": "instruction for answering the question"
                    }
                  }
                }
              }
            }
          }
        }
      }
    }
  }
}
```

Finally, I implemented the Lambda function that receives the event from the agent and invokes the relevant tool according to the API paths defined above.

```python
import json

import tools


def lambda_handler(event, context):
    # Print the received event to the logs
    print("Received event: ")
    print(event)

    # Extract the action group, API path, and HTTP method from the agent event
    action = event["actionGroup"]
    api_path = event["apiPath"]
    http_method = event["httpMethod"]

    # Check the API path to determine which tool function to call
    if api_path == "/get_summary_instruction":
        body = tools.summary_gen_tool()
        response_code = 200
    elif api_path == "/get_sentiment_instruction":
        body = tools.sentiment_gen_tool()
        response_code = 200
    elif api_path == "/get_other_question_instruction":
        body = tools.other_question_gen_tool()
        response_code = 200
    elif api_path == "/gen_conversation":
        # The agent sends parameters as a list of {"name", "type", "value"} entries
        params = {p["name"]: p["value"] for p in event.get("parameters", [])}
        body = tools.get_conversation_tool(
            params["ConversationId"], params["question"], params["instruction"]
        )
        response_code = 200
    else:
        # Unrecognized API path: return an error message as a plain string
        # (a set literal here would make json.dumps raise a TypeError)
        body = "{}::{} is not a valid api, try another one.".format(action, api_path)
        response_code = 400

    response_body = {"application/json": {"body": json.dumps(body)}}

    # Print the response body to the logs
    print(f"Response body: {response_body}")

    # Build the response in the format the agent expects
    action_response = {
        "actionGroup": action,
        "apiPath": api_path,
        "httpMethod": http_method,
        "httpStatusCode": response_code,
        "responseBody": response_body,
    }
    return {"messageVersion": "1.0", "response": action_response}
```

Ideally, the LLM first calls one of the instruction APIs to choose the right instruction and then invokes the `/gen_conversation` API to fetch the conversation history for the given conversation ID and create the final response. To make sure the agent follows this exact order, you need to provide instructions when defining the agent. Here are the instructions I provided to the agent:

you are an AI summarizer assistant that helps users to answer their questions. first find the appropriate instruction from the summary_gen_tool or sentiment_gen_tool or other_question_gen_tool functions before running another function. if the question is not about the sentiment or summary of the conversation then use instruction provided by other_question_gen_tool. remember you can only use retrieved instructions from the above 3 provided functions.
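If you prefer to create the agent from code rather than the console, the following is a minimal sketch with the boto3 `bedrock-agent` client. The agent name `test-agent` and action group name `Agent-AWS-API` are taken from the logged events later in this post; the model ID, role ARN, Lambda ARN, bucket, and schema key are placeholders you must supply, and `create_intent_agent` and `wait_while_status` are hypothetical helpers. The IAM role must be assumable by Amazon Bedrock, and the Lambda function needs a resource-based policy that allows Bedrock to invoke it.

```python
import time


def wait_while_status(client, agent_id, status, poll_seconds=2.0, timeout_seconds=120.0):
    """Poll get_agent until the agent leaves `status`; return the new status."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        current = client.get_agent(agentId=agent_id)["agent"]["agentStatus"]
        if current != status:
            return current
        if time.monotonic() > deadline:
            raise TimeoutError(f"Agent {agent_id} is still {status}")
        time.sleep(poll_seconds)


def create_intent_agent(client, role_arn, lambda_arn, bucket, schema_key,
                        instruction, model_id="anthropic.claude-v2"):
    """Create the agent, attach the OpenAPI action group, and prepare it."""
    agent = client.create_agent(
        agentName="test-agent",
        agentResourceRoleArn=role_arn,
        foundationModel=model_id,  # assumption: any Claude model enabled for agents
        instruction=instruction,
        idleSessionTTLInSeconds=600,
    )["agent"]
    agent_id = agent["agentId"]
    # A new agent starts in CREATING; wait before attaching the action group.
    wait_while_status(client, agent_id, "CREATING")

    client.create_agent_action_group(
        agentId=agent_id,
        agentVersion="DRAFT",
        actionGroupName="Agent-AWS-API",
        actionGroupExecutor={"lambda": lambda_arn},
        apiSchema={"s3": {"s3BucketName": bucket, "s3ObjectKey": schema_key}},
    )
    # Build the DRAFT version so it can be tested through the TSTALIASID alias.
    client.prepare_agent(agentId=agent_id)
    return agent_id
```

For example, `create_intent_agent(boto3.client("bedrock-agent"), role_arn, lambda_arn, "YOUR-BUCKET", "schema.json", instruction)`, where `schema.json` is a hypothetical key for the uploaded OpenAPI file.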

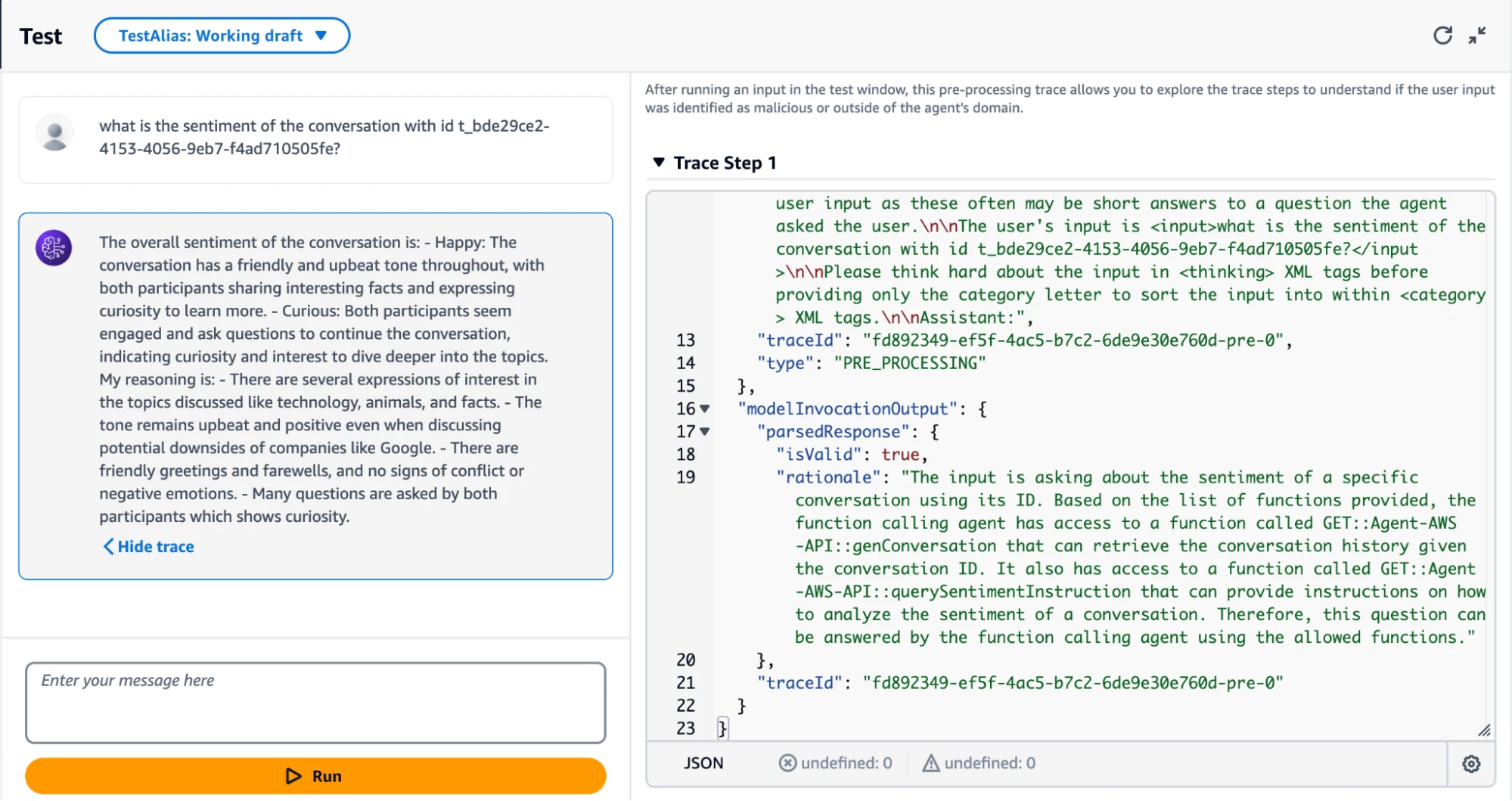
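Because the handler code above starts mid-function, it can help to exercise the same routing locally with an event shaped like the ones the agent sends. Below is a minimal self-contained sketch: the tool functions and the instruction text are hypothetical stubs standing in for the post's `tools` module, while the response envelope (`messageVersion`, `actionGroup`, `apiPath`, `httpMethod`, `httpStatusCode`, `responseBody`) mirrors the one the handler builds. Unlike the original fragment, it flattens `parameters` into a dict and returns a 400 when a required parameter is missing.

```python
import json

# Hypothetical stubs standing in for the post's `tools` module.
SENTIMENT_INSTRUCTION = "Identify the sentiment of the conversation."  # placeholder text


def get_sentiment_instruction_tool():
    return SENTIMENT_INSTRUCTION


def get_conversation_tool(conversation_id, question, instruction):
    # Stub: a real implementation would load the conversation by id
    return {"conversation": [], "question": question, "instruction": instruction}


def lambda_handler(event, context=None):
    action = event["actionGroup"]
    api_path = event["apiPath"]
    http_method = event["httpMethod"]
    # Flatten [{"name": ..., "type": ..., "value": ...}, ...] into {name: value}
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}

    if api_path == "/get_sentiment_instruction":
        body, status = get_sentiment_instruction_tool(), 200
    elif api_path == "/gen_conversation":
        required = ("ConversationId", "question", "instruction")
        missing = [name for name in required if name not in params]
        if missing:
            body, status = f"Missing parameters: {', '.join(missing)}", 400
        else:
            body = get_conversation_tool(
                params["ConversationId"], params["question"], params["instruction"]
            )
            status = 200
    else:
        body, status = f"{action}::{api_path} is not a valid api, try another one.", 400

    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": action,
            "apiPath": api_path,
            "httpMethod": http_method,
            "httpStatusCode": status,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```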

Let’s test our agent with the same questions we used to test our function calling logic. I will showcase one question here, but you can try the other two questions to verify that the agent behaves as intended.

Here I ask my agent the following question: “what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?”

In the first call, the agent correctly chose the '/get_sentiment_instruction' API from the available options, since the user's question is about the sentiment of the conversation.

```
{'messageVersion': '1.0', 'actionGroup': 'Agent-AWS-API', 'agent': {'alias': 'TSTALIASID', 'name': 'test-agent', 'version': 'DRAFT', 'id': 'IJ4PSGSCBO'}, 'inputText': 'what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?', 'sessionId': '131578276461585', 'sessionAttributes': {}, 'promptSessionAttributes': {}, 'apiPath': '/get_sentiment_instruction', 'httpMethod': 'GET'}

Response body: {'application/json': {'body': '"Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these following categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain a mix of sentiment from the categories. Also make sure to provide reasoning behind your decision in bullet points"'}}
```

In the second call, the agent identified the '/gen_conversation' API after collecting all the required information and populating its parameters (question, instruction, and conversation ID). Invoking this API retrieves the conversation history along with the other information needed to invoke the Claude endpoint.

```
{'messageVersion': '1.0', 'actionGroup': 'Agent-AWS-API', 'agent': {'alias': 'TSTALIASID', 'name': 'test-agent', 'version': 'DRAFT', 'id': 'IJ4PSGSCBO'}, 'inputText': 'what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?', 'sessionId': '131578276461585', 'sessionAttributes': {}, 'promptSessionAttributes': {}, 'apiPath': '/gen_conversation', 'httpMethod': 'GET', 'parameters': [{'name': 'question', 'type': 'string', 'value': 'what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?'}, {'name': 'instruction', 'type': 'string', 'value': 'Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these follwoing categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain mix of sentiment from the categories. Also make sure to provided reasoning behind your decision in bullet points'}, {'name': 'ConversationId', 'type': 'string', 'value': 't_bde29ce2-4153-4056-9eb7-f4ad710505fe'}]}

Response body: {'application/json': {'body': '{
  "conversation": [
    {
      "message": "Are you a fan of Google or Microsoft?",
      "agent": "agent_1"
    },
    {
      "message": "Both are excellent technology they are helpful in many ways. For the security purpose both are super.",
      "agent": "agent_2"
    },
    //// TRUNCATED CONVERSATION /////
    {
      "message": "I heard that too. Well, it was nice chatting with you. Have a good day. ",
      "agent": "agent_1"
    }
  ],
  "question": "what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?",
  "instruction": "Pay attention to the provided conversation history while answering the questions. Make sure you have sufficient data to back your reasoning when answering the question. The identified sentiment must be taken from these following categories: Angry, Fearful, Happy, Neutral, Sad, Disgusted, Surprised, Curious to dive deeper. A conversation may contain a mix of sentiment from the categories. Also make sure to provide reasoning behind your decision in bullet points"
}'
}
}
```

In the final step, the agent invoked Claude to create the final answer, as shown in the screenshot below. In the AWS Console you can also view the trace of the steps the agent takes, including the prompt it uses and its reasoning for fulfilling the user’s request. The agent correctly identified the two tools it needed to answer the question: Agent-AWS-API::genConversation and Agent-AWS-API::querySentimentInstruction.
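The same trace can also be read programmatically. A minimal sketch, assuming boto3's `bedrock-agent-runtime` client: calling `invoke_agent` with `enableTrace=True` returns a `completion` event stream in which the answer text arrives in `chunk` events and the agent's steps arrive in `trace` events. The helper below only parses that stream, so it can be tested without AWS access; the agent and alias IDs in the commented usage are placeholders you would replace with your own.

```python
from typing import Any, Iterable


def collect_agent_response(
    completion: Iterable[dict[str, Any]],
) -> tuple[str, list[dict[str, Any]]]:
    """Join the answer chunks and gather trace events from an invoke_agent stream."""
    answer_parts: list[str] = []
    traces: list[dict[str, Any]] = []
    for event in completion:
        if "chunk" in event:
            # Answer text arrives as UTF-8 bytes, possibly split across several chunks
            answer_parts.append(event["chunk"]["bytes"].decode("utf-8"))
        elif "trace" in event:
            # Trace events describe the agent's steps (e.g. rationale, tool invocations)
            traces.append(event["trace"])
    return "".join(answer_parts), traces


# Usage against a deployed agent (requires AWS credentials; IDs are placeholders):
# import boto3
# client = boto3.client("bedrock-agent-runtime")
# response = client.invoke_agent(
#     agentId="YOUR_AGENT_ID",
#     agentAliasId="YOUR_AGENT_ALIAS_ID",
#     sessionId="my-test-session",
#     inputText="what is the sentiment of the conversation with id t_bde29ce2-4153-4056-9eb7-f4ad710505fe?",
#     enableTrace=True,
# )
# answer, traces = collect_agent_response(response["completion"])
```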

This post presented an approach to intent identification within chatbot applications that harnesses Bedrock Agents and Anthropic Claude's function calling capability on Bedrock. One of the main observations from comparing the two methods is that agents abstract away much of the work for us: creating the prompt, parsing the output, adding tools to the agent’s toolbox, and, most importantly, iteratively invoking the LLM. With the function calling method, you have to implement that logic yourself. Moreover, the ability to define tools as APIs using an OpenAPI schema in JSON or YAML makes the process more structured and a better option for production.
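As an illustration of that structure, here is a sketch of how two of the APIs exercised above might be described in an OpenAPI 3.0 schema, built as a Python dict and serialized to JSON for the action group. The paths, the GET method, and the parameter names come from the logs in this post, and the `genConversation` / `querySentimentInstruction` operation IDs are inferred from the tool names in the trace; the descriptions, response schemas, and the use of query parameters are assumptions, not the author's actual schema.

```python
import json


def string_query_param(name: str, description: str) -> dict:
    # Required string parameter passed in the query (an assumption for this sketch)
    return {
        "name": name,
        "in": "query",
        "description": description,
        "required": True,
        "schema": {"type": "string"},
    }


schema = {
    "openapi": "3.0.0",
    "info": {"title": "Agent-AWS-API", "version": "1.0.0"},
    "paths": {
        "/get_sentiment_instruction": {
            "get": {
                "operationId": "querySentimentInstruction",
                "description": "Return the instruction to use when the question is about sentiment.",
                "responses": {
                    "200": {
                        "description": "Instruction text",
                        "content": {"application/json": {"schema": {"type": "string"}}},
                    }
                },
            }
        },
        "/gen_conversation": {
            "get": {
                "operationId": "genConversation",
                "description": "Fetch a conversation by id and return it with the question and instruction.",
                "parameters": [
                    string_query_param("ConversationId", "Id of the stored conversation"),
                    string_query_param("question", "The user's original question"),
                    string_query_param("instruction", "Instruction returned by an instruction API"),
                ],
                "responses": {
                    "200": {
                        "description": "Conversation history, question, and instruction",
                        "content": {"application/json": {"schema": {"type": "object"}}},
                    }
                },
            }
        },
    },
}

# JSON text suitable for saving as the action group's schema file
schema_json = json.dumps(schema, indent=2)
```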

We demonstrated a way to move the complexity of authoring the best prompt for a task away from the user and into the application logic. This can potentially lead to dynamic and responsive chatbots that excel at understanding and fulfilling user intents, ultimately enhancing the user experience in conversational interfaces. You can find the code for this post here.

Ali Arabi is a Senior Machine Learning Architect at Caylent with extensive experience solving business problems by building and operationalizing end-to-end cloud-based Machine Learning and Deep Learning solutions and pipelines using Amazon SageMaker AI. He holds an MBA and an MSc in Data Science & Analytics and is an AWS Certified Machine Learning professional.
